My goal here is to describe my research portfolio as succinctly as possible. If you are interested in the details of individual projects, please see the “Projects” section.

Research

Primary Research:

The plots, images, and animations listed here were all created by me as part of my research, using software including (but not limited to) R, Python, and Matlab. If you use any image in a publication, please cite the corresponding peer-reviewed paper.

Overview

My primary research involves large-scale network analysis. Specifically, I am interested in developing novel statistical network models based on Exponential family Random Graph Models (ERGMs). These models can be adapted to many types of networks, such as binary, weighted, dynamic, and bipartite networks. Applications include modeling dynamically evolving social networks, political networks, trade networks, email networks, water pollution networks, and product review networks.

To scale up to large networks, inference in these models is based on Variational EM algorithms. I code these algorithms in a combination of R and C++. Compared to naïve R code, my RcppArmadillo implementation is at least 50 times faster.
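For readers unfamiliar with Variational EM, the sketch below shows the basic iteration for the simplest relative of these models, an undirected binary stochastic block model, written in plain Python rather than R/C++. It is illustrative only and is not the implementation described above: the model choice, the mean-field (fully factorized) variational family, and the function name `vem_sbm` are assumptions. The M-step updates block proportions and block-to-block edge probabilities in closed form; the E-step updates each node's soft membership given everyone else's.

```python
import math

# Illustrative sketch (assumed model: binary SBM with a mean-field variational family).
def vem_sbm(adj, init_labels, k, n_iter=30, eps=1e-6):
    """Mean-field Variational EM for an undirected binary SBM, k >= 2.

    adj: symmetric 0/1 adjacency matrix (list of lists, zero diagonal).
    init_labels: initial hard block label in range(k) for every node.
    Returns (tau, alpha, pi): variational membership probabilities,
    block proportions and block-to-block edge probabilities.
    """
    n = len(adj)
    # Soft start: 0.9 on the initial label, the rest spread over the other blocks.
    tau = [[0.9 if q == init_labels[i] else 0.1 / (k - 1) for q in range(k)]
           for i in range(n)]
    for _ in range(n_iter):
        # M-step: closed-form updates given the current variational distribution.
        alpha = [sum(tau[i][q] for i in range(n)) / n for q in range(k)]
        pi = [[0.5] * k for _ in range(k)]
        for q in range(k):
            for l in range(k):
                num = den = 0.0
                for i in range(n):
                    for j in range(n):
                        if i != j:
                            w = tau[i][q] * tau[j][l]
                            num += w * adj[i][j]
                            den += w
                if den > 0:
                    pi[q][l] = min(max(num / den, eps), 1 - eps)  # keep logs finite
        # E-step: one fixed-point sweep; tau[i] is overwritten in place.
        for i in range(n):
            logits = []
            for q in range(k):
                s = math.log(max(alpha[q], eps))
                for j in range(n):
                    if j == i:
                        continue
                    x = adj[i][j]
                    for l in range(k):
                        p = pi[q][l]
                        s += tau[j][l] * (x * math.log(p) + (1 - x) * math.log(1 - p))
                logits.append(s)
            m = max(logits)  # subtract the max for a numerically stable softmax
            probs = [math.exp(v - m) for v in logits]
            z = sum(probs)
            tau[i] = [v / z for v in probs]
    return tau, alpha, pi

# Hypothetical data: two 5-node cliques with no edges between them; node 4 starts mislabelled.
n = 10
adj = [[1 if i != j and (i < 5) == (j < 5) else 0 for j in range(n)] for i in range(n)]
tau, alpha, pi = vem_sbm(adj, init_labels=[0, 0, 0, 0, 1, 1, 1, 1, 1, 1], k=2)
labels = [max(range(2), key=lambda q: tau[i][q]) for i in range(n)]
print(labels)  # [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
```

Initialization matters: from a near-uniform start, mean-field updates for block models can collapse to a trivial single-block solution, so the sketch takes an initial hard labelling (which in practice might come from spectral clustering or k-means). The double loop over node pairs costs O(n²K²) per iteration, which is the kind of cost that compiled code and stochastic variants aim to reduce.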

Time-Evolving Community Detection

In one project, I developed an integrated approach that clusters dynamic networks while simultaneously detecting a temporally evolving latent block structure. In simple terms, this approach detects communities that evolve over time, which has wide applications in modeling community evolution in social, trade, and email networks. Analyzing a widely used International Trade Network dataset, I found that certain government policies, such as trade liberalization and joining Mercosur, had positive effects in moving Brazil from a less dense cluster to a well-connected community.
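Once each node has a community label at every time step, movements such as a country shifting from one cluster to another can be tallied as flows between consecutive time steps, which is what an alluvial plot draws. A minimal sketch, assuming one hard label per node per time step; the helper name `transition_counts` and the example memberships are hypothetical:

```python
from collections import Counter

# Illustrative sketch: tally community-to-community flows between two time steps.
def transition_counts(labels_t, labels_next):
    """Count nodes flowing from community a at time t to community b at time t+1.

    labels_t, labels_next: dicts mapping node id -> community label.
    Only nodes present at both time steps are counted.
    """
    flows = Counter()
    for node, a in labels_t.items():
        if node in labels_next:
            flows[(a, labels_next[node])] += 1
    return flows

# Hypothetical memberships for five nodes at two consecutive time points.
t1 = {0: "C1", 1: "C1", 2: "C2", 3: "C2", 4: "C2"}
t2 = {0: "C2", 1: "C1", 2: "C2", 3: "C2", 4: "C2"}
print(sorted(transition_counts(t1, t2).items()))
# [(('C1', 'C1'), 1), (('C1', 'C2'), 1), (('C2', 'C2'), 3)]
```

Each (from, to) pair becomes one ribbon in the alluvial plot, with width proportional to its count.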

Time-Evolving Community Detection in the International Trade Network

Non-Parametric Clustering of Weighted Networks

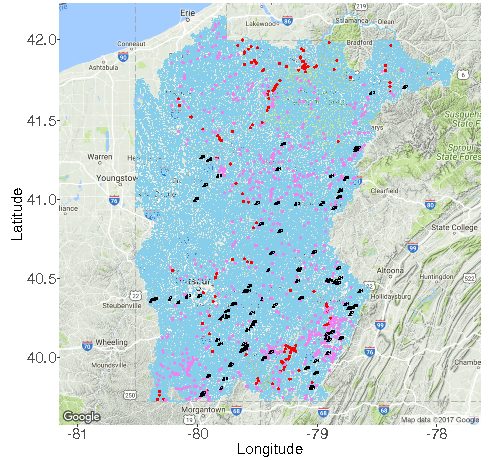

In another project, I proposed a novel nonparametric kernel local likelihood approach to cluster and analyze weighted networks. This method has important environmental applications. For example, water pollution is a serious problem in the rivers of Pennsylvania and has adverse impacts on human health. In these river networks, an important research question is how to detect “hubs” of polluter sources that may be spatially far apart but still behave similarly with respect to their adverse effects on the environment. Our approach clusters such hubs while taking into account the irregularly sampled pollutant concentrations.
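To give a flavour of how kernel methods cope with irregularly sampled concentrations, the sketch below shows the simplest kernel local likelihood estimator: with a Gaussian working likelihood and a locally constant mean, maximizing the kernel-weighted local log-likelihood gives the Nadaraya-Watson smoother. It is not the clustering method itself; the Gaussian kernel, the bandwidth `h`, the function name `local_constant_fit`, and the sample data are assumptions.

```python
import math

# Illustrative sketch: local-constant kernel local likelihood (Gaussian working model).
def local_constant_fit(t0, times, values, h):
    """Kernel local likelihood estimate of the mean concentration at time t0.

    Maximizing sum_i K_h(t0 - t_i) * log N(y_i | mu, sigma^2) over mu gives
    the kernel-weighted average computed below (Nadaraya-Watson estimator).
    """
    weights = [math.exp(-0.5 * ((t0 - t) / h) ** 2) for t in times]  # Gaussian kernel
    total = sum(weights)
    if total == 0:
        raise ValueError("no samples within reach of t0; increase h")
    return sum(w * y for w, y in zip(weights, values)) / total

# Hypothetical, irregularly spaced sampling days and concentrations at one site.
days = [0, 3, 4, 15, 40, 41, 90]
conc = [2.0, 2.2, 2.1, 2.5, 4.0, 4.2, 3.1]
grid = [0, 20, 40, 60, 90]
print([round(local_constant_fit(d, days, conc, h=10.0), 2) for d in grid])
```

Evaluating every site on a common time grid in this way turns irregular sampling into comparable curves, which a downstream clustering step can then work with.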

Non-Parametrically Clustered Weighted River Network

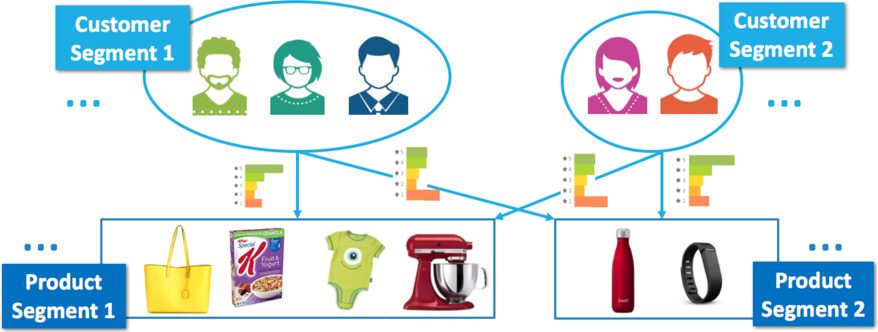

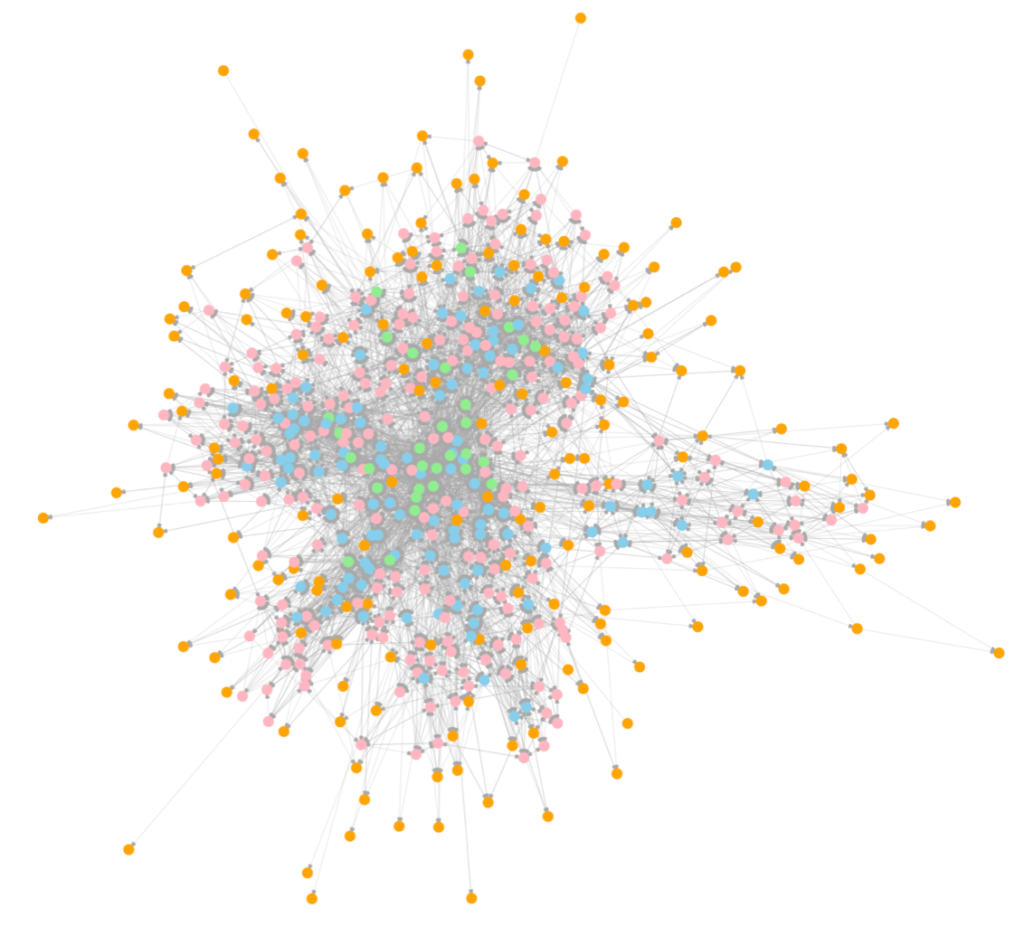

Detecting “Fake” Reviewers in Product-Review Bipartite Networks

I am also interested in business applications of these network models. In my third project, I have been exploring bipartite networks, which comprise two (or more) disjoint sets of nodes interacting with each other. Such networks occur frequently in product-review data. For example, a consumer-product network arises when people buy products on an e-commerce website (like Amazon) and review them. An important research question in this setting is how to detect fake reviewers.

Consumer-product networks are generally quite sparse, which is both good and bad. On the plus side, sparse data structures make scaling up easier. On the downside, sparsity leads to higher standard errors in parameter estimates.
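As an illustration of why sparsity helps, a reviewer-product network can be stored in compressed sparse row (CSR) form, whose memory grows with the number of reviews rather than with reviewers × products. A minimal standard-library sketch; the function name `to_csr` and the review triples are hypothetical:

```python
# Illustrative sketch: CSR storage for a bipartite reviewer-product network.
def to_csr(edges, n_rows):
    """Build a compressed sparse row (CSR) representation of a bipartite graph.

    edges: iterable of (reviewer, product, rating) with integer ids.
    Returns (indptr, indices, data): the products reviewed by reviewer r are
    indices[indptr[r]:indptr[r + 1]], with ratings in the same slice of data.
    """
    edges = sorted(edges)                 # group by reviewer, then product
    indptr = [0] * (n_rows + 1)
    for r, _, _ in edges:
        indptr[r + 1] += 1                # count reviews per reviewer
    for r in range(n_rows):
        indptr[r + 1] += indptr[r]        # prefix sums give row offsets
    indices = [p for _, p, _ in edges]
    data = [x for _, _, x in edges]
    return indptr, indices, data

# Hypothetical ratings: 3 reviewers, products with integer ids.
reviews = [(0, 5, 4.0), (2, 1, 1.0), (0, 2, 5.0), (1, 5, 3.0)]
indptr, indices, data = to_csr(reviews, n_rows=3)
print(indptr, indices, data)  # [0, 2, 3, 4] [2, 5, 5, 1] [5.0, 4.0, 3.0, 1.0]
```

For scale, a dense 10K × 8K reviewer-product matrix has 80 million cells, whereas CSR stores one entry per review plus one offset per reviewer.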

Two Mode Segmentation in Product-Review Network

To handle large networks with hundreds of nodes, inference in these network models relies on a Variational EM algorithm tailored to each model. In addition, I have been designing stochastic optimization techniques that can scale these algorithms up to networks with millions of nodes.

Scaling Up Using Stochastic Variational EM

In a recent breakthrough (May 2018), I succeeded in integrating stochastic methods with Variational EM algorithms. The resulting Stochastic Variational EM (SVEM) algorithm scales inference up to millions of nodes with thousands of clusters while taking just a few hours to converge. I am currently analyzing Amazon’s consumer-product review network with ~10K reviewers and ~8K products. These algorithms have the potential to scale up even further.
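The general idea behind stochastic variational methods is to replace a full pass over all node pairs with a noisy estimate from a random minibatch, and to blend that estimate into the current global value using a decreasing Robbins-Monro step size. The toy sketch below applies this idea to a single global quantity, the overall edge density; it is not the SVEM algorithm itself, and the function name `stochastic_density` and the step-size constants `tau0` and `kappa` are assumptions.

```python
import random

# Illustrative sketch: Robbins-Monro averaging of minibatch estimates.
def stochastic_density(adj, n_steps=2000, batch=20, tau0=1.0, kappa=0.7, seed=0):
    """Stochastic estimate of the edge density of an undirected graph.

    At step t a minibatch of random node pairs gives a noisy, unbiased estimate
    of the density; it is blended into the running estimate with step size
    rho_t = (t + tau0) ** -kappa, where 0.5 < kappa <= 1 ensures convergence.
    """
    rng = random.Random(seed)
    n = len(adj)
    estimate = 0.5                              # arbitrary starting value
    for t in range(n_steps):
        pairs = [rng.sample(range(n), 2) for _ in range(batch)]  # distinct pairs
        noisy = sum(adj[i][j] for i, j in pairs) / batch
        rho = (t + tau0) ** -kappa
        estimate = (1 - rho) * estimate + rho * noisy
    return estimate

# Hypothetical graph: two 5-node cliques, true density 40/90.
adj = [[1 if i != j and (i < 5) == (j < 5) else 0 for j in range(10)] for i in range(10)]
print(round(stochastic_density(adj), 3))
```

Each step touches only `batch` node pairs instead of all n² of them, which is what lets this style of update scale to very large networks.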

Collaborative research:

Geo-scientific Applications in River Networks

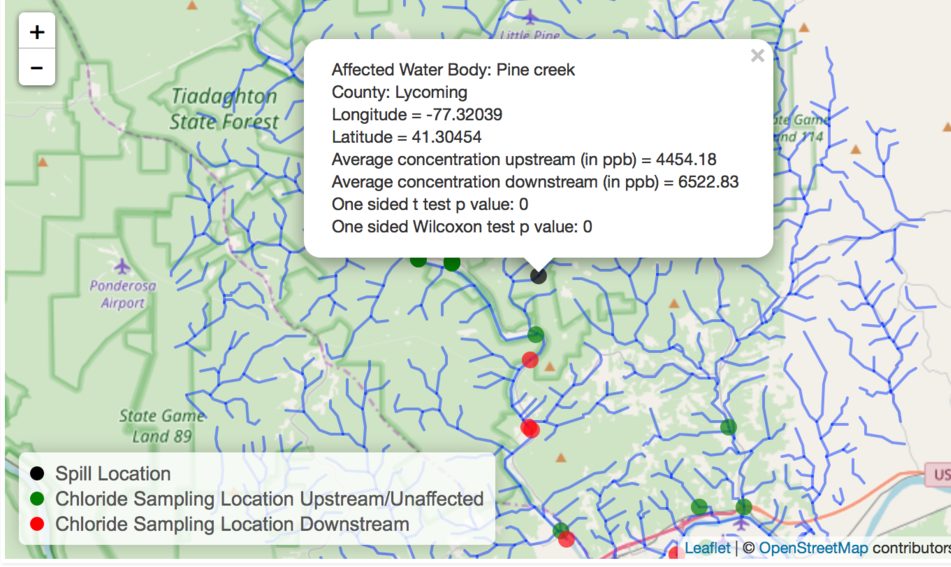

As part of my collaboration with the Geoscience department at Penn State, I developed a novel automated statistical methodology to detect polluter sources in river networks. I implemented this approach as GeoNet, an open-source R Shiny application that allows researchers to analyze “big” water pollution data with over 20K sampling sites and to identify significant polluter sources using multiple parametric and non-parametric statistical tests.
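One simple non-parametric test of this kind compares concentrations sampled downstream of a candidate source with those sampled upstream. The sketch below is a generic one-sided permutation test on hypothetical data; it is not GeoNet's code, GeoNet's actual choice of tests may differ, and the function name `permutation_pvalue` is an assumption.

```python
import random

# Illustrative sketch: one-sided permutation test (downstream mean > upstream mean).
def permutation_pvalue(upstream, downstream, n_perm=10000, seed=0):
    """Estimate P(mean difference >= observed) under random relabelling of sites.

    Uses the (hits + 1) / (n_perm + 1) correction so the p-value is never zero.
    """
    rng = random.Random(seed)
    n_down = len(downstream)
    observed = sum(downstream) / n_down - sum(upstream) / len(upstream)
    pooled = list(downstream) + list(upstream)
    n_up = len(pooled) - n_down
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)  # under H0 the up/down labels are exchangeable
        diff = sum(pooled[:n_down]) / n_down - sum(pooled[n_down:]) / n_up
        if diff >= observed - 1e-12:  # small tolerance for floating-point ties
            hits += 1
    return (hits + 1) / (n_perm + 1)

# Hypothetical concentrations (mg/L) at sites above and below a candidate spill.
up = [10.1, 9.8, 10.4, 9.9, 10.0, 10.2]
down = [12.5, 13.1, 11.9, 12.8, 13.4, 12.2]
print(permutation_pvalue(up, down))
```

A permutation test makes no distributional assumption about concentrations, which is why it is a common non-parametric companion to parametric tests such as the t-test.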

GeoNet detecting a significant spill location

Analysis of “big” chromosome data

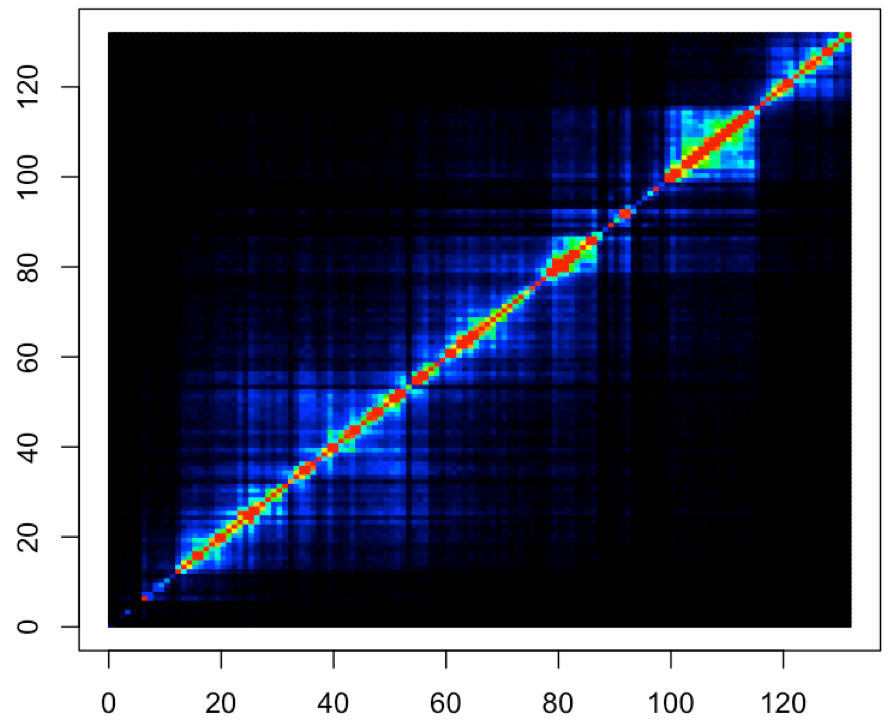

In a recent collaboration with the Biostatistics department at Penn State, I have been analyzing chromosome data collected with the state-of-the-art Hi-C method to study the three-dimensional architecture of genomes. Investigating such datasets has important applications in precision medicine. However, the data is quite big: to give you an idea of the scale, one of the matrices is 25K × 25K, and after some data wrangling I transformed it into a 10M × 25K data frame. Right now I am trying out a constrained penalized LASSO regression model to perform variable selection on this processed data. There are several challenges, both in storing the design matrix and in the time it takes to fit the model. However, the initial results are quite promising and agree with recently published literature on detecting Topologically Associating Domains (TADs). More on this soon. Stay tuned.
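To make the storage challenge concrete: if a 10M × 25K design were stored densely as 8-byte doubles, it would need 10^7 × 2.5 × 10^4 × 8 = 2 × 10^12 bytes, roughly 2 TB. For reference, the basic (unconstrained) LASSO objective can be minimized by cyclic coordinate descent with soft-thresholding. The pure-Python sketch below omits the constraints of the actual model; the function names and toy data are hypothetical.

```python
# Illustrative sketch: unconstrained LASSO via cyclic coordinate descent.
def soft_threshold(z, lam):
    """S(z, lam) = sign(z) * max(|z| - lam, 0)."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0

def lasso_cd(X, y, lam, n_iter=200):
    """Minimize (1/2n)||y - Xb||^2 + lam * ||b||_1 by cyclic coordinate descent.

    X: list of n rows with p features each; y: list of n responses.
    No intercept; centre/standardize the data beforehand if needed.
    """
    n, p = len(X), len(X[0])
    b = [0.0] * p
    resid = list(y)                        # residual r = y - Xb (b starts at 0)
    col_sq = [sum(X[i][j] ** 2 for i in range(n)) / n for j in range(p)]
    for _ in range(n_iter):
        for j in range(p):
            if col_sq[j] == 0:
                continue
            # rho_j = (1/n) x_j^T (r + x_j b_j): correlation with the partial residual.
            rho = sum(X[i][j] * (resid[i] + X[i][j] * b[j]) for i in range(n)) / n
            new_bj = soft_threshold(rho, lam) / col_sq[j]
            if new_bj != b[j]:
                delta = new_bj - b[j]
                for i in range(n):
                    resid[i] -= X[i][j] * delta  # keep the residual in sync with b
                b[j] = new_bj
    return b

# Hypothetical toy data: y = 2 * x1, and x2 is irrelevant.
X = [[1.0, 1.0], [1.0, -1.0], [-1.0, 1.0], [-1.0, -1.0]]
y = [2.0, 2.0, -2.0, -2.0]
b = lasso_cd(X, y, lam=0.1)
print(b)
```

The L1 penalty shrinks the true coefficient slightly (to 1.9 here) and sets the irrelevant one exactly to zero, which is the variable-selection behaviour the analysis relies on.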

Recent Research Topics:

I am currently exploring the literature on the following topics:

- Non-Convex Optimization using Alternating Direction Method of Multipliers (ADMM).

- Automated Machine Learning (AutoML) using Recurrent Neural Networks.

Primary Research Interests

- Large scale network analysis

- Statistical Machine Learning

- Variational Inference

- Stochastic Optimization

- Bayesian Analysis and MCMC algorithms

- Parallel Computing and Visualization

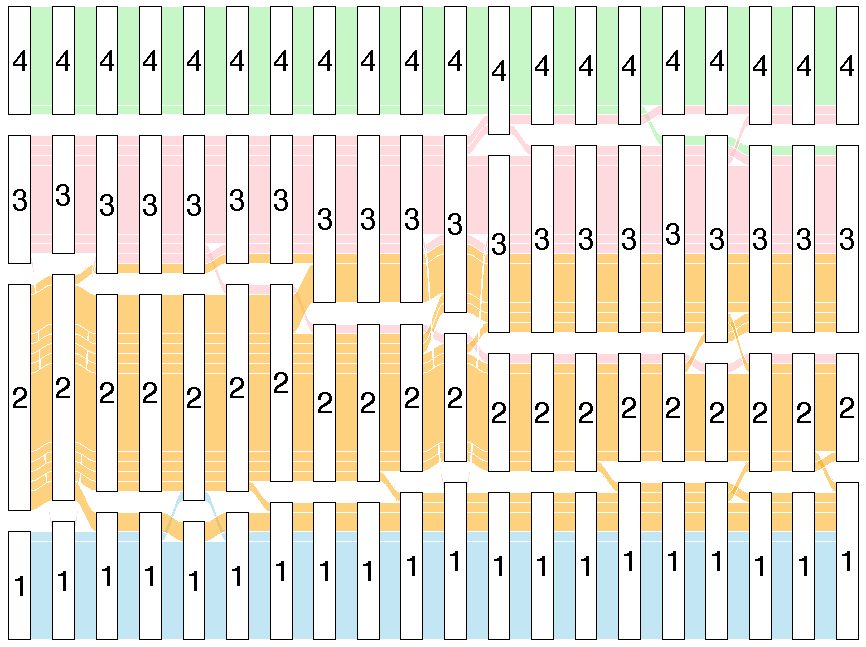

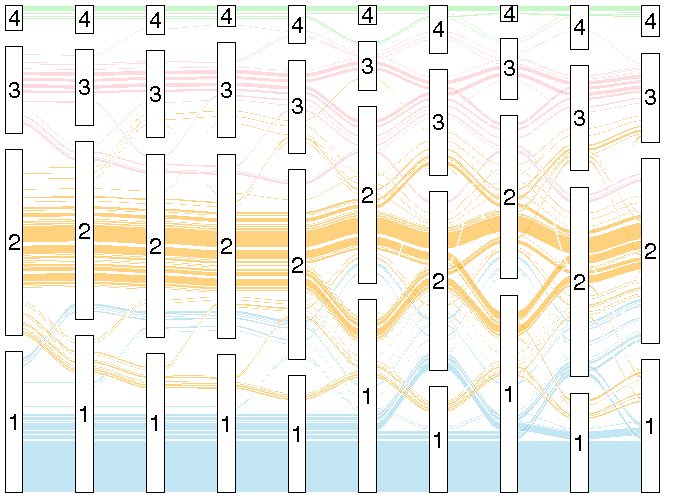

Alluvial plot visualizing the time-evolving communities

Brazil's Trade Network

Email Network in a large EU Institution

Alluvial plot for Email Network

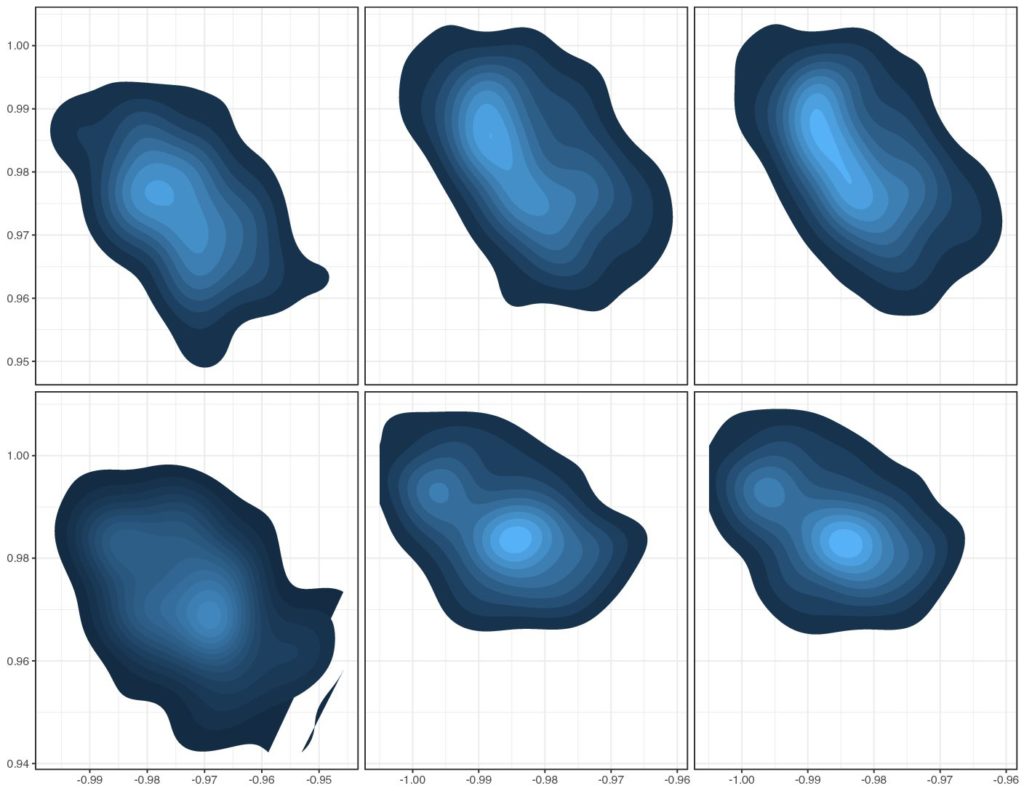

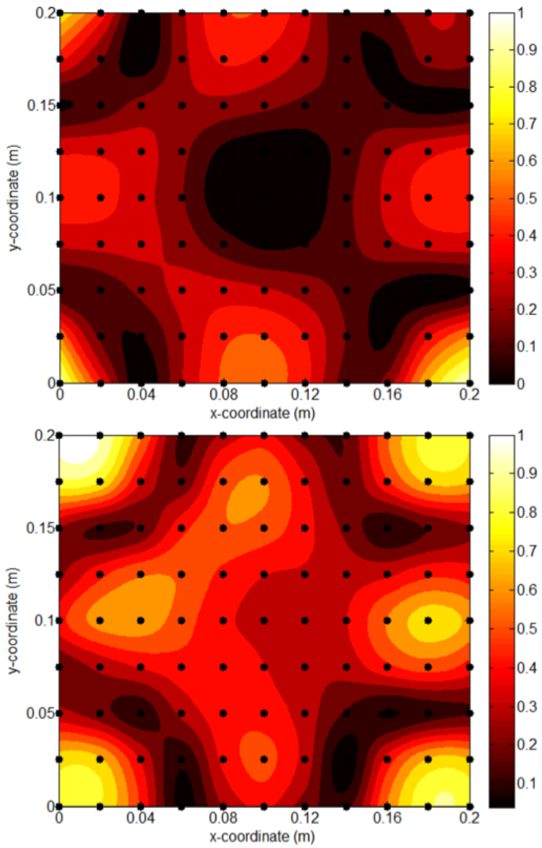

High Fidelity Simulations

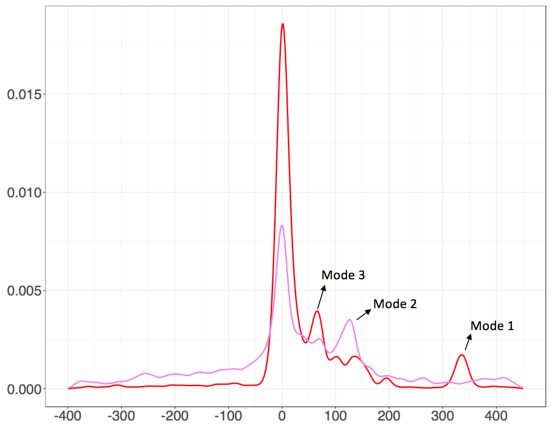

Non-parametrically estimated densities

Collaborative Research Interests

- Network Applications in Environmental Big Data

- BioStatistical Analysis of Hi-C Data

- Building Data Visualization tools using R Shiny and Leaflet

Chromosome Interaction Intensity Matrix

Acoustic Reconstruction

Recent Research Interests

- Non-Convex Optimization using ADMM

- AutoML using Recurrent Neural Networks

Advisors and Collaborators

Wayne Desarbo

Mary Jean and Frank P. Smeal Distinguished Professor of Marketing

Smeal College of Business, Penn State

Zhenhui Li

Associate Professor

College of Information Sciences and Technology, Penn State

Qian Chen

Ph.D. Candidate in Marketing

Smeal College of Business, Penn State

Duncan Fong

Professor of Marketing and Statistics

Smeal College of Business, Penn State

Great advisors and collaborators!

Since coming to Penn State, I have had the unique opportunity to work with leading statistics and machine learning scientists (Dr. Lingzhou Xue and Dr. Kevin Lee), geoscientists (Dr. Susan Brantley and Dr. Tao Wen), biostatistics researchers (Dr. Yu Zhang), researchers from the College of Information Sciences and Technology (Dr. Zhenhui Li), and researchers from the Smeal College of Business (Dr. Wayne Desarbo, Dr. Duncan Fong, and Qian Chen).